By Lantao

PolarDB-X is a cloud-native distributed database that allows storage of massive amounts of data and high-concurrency, complex queries. With a shared-nothing architecture that separates storage from compute, the enterprise-level cloud-native database supports horizontal scaling, distributed transactions, and hybrid loads, and provides high availability. It is highly compatible with MySQL and its ecosystems.

PolarDB-X is designed based on a shared-nothing architecture that separates storage from compute. The system consists of five core components: compute node (CN), data node (DN), global meta service (GMS), change data capture (CDC), and columnar node.

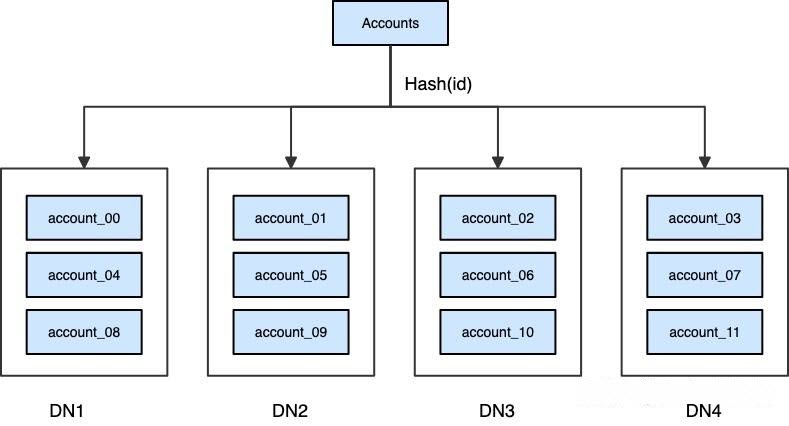

PolarDB-X horizontally partitions data in a table into multiple data nodes. Data is partitioned by using partitioning functions. PolarDB-X supports common partitioning functions such as hash and range.

The following figure provides an example. In the example, the accounts table is horizontally split into 12 partitions from account_00 to account_11 based on the hash value of the ID column of each row of data. The partitions are evenly distributed on four data nodes.

Horizontal partitioning of data is transparent to users. Users can access any data table in the same way as using a standalone database. However, for PolarDB-X itself, due to the horizontal partitions, the data accessed may be located on different data nodes.

Take the transfer scenario as an example. Assume that the user account is a partitioned table, then the transfer-in and the transfer-out parties of the same transaction may be located on different data nodes. For example, the account Alice is located on DN1, and the account Bob is on DN2. In this case, distributed transactions are required to ensure the transfer security.

BEGIN;

UPDATE account SET balance = balance - 20 WHERE name = 'Alice';

UPDATE account SET balance = balance + 20 WHERE name = 'Bob';

COMMIT;The ability to fully support the atomicity, consistency, isolation, and durability (ACID) features of transactions is the core feature of enterprise-level distributed databases. Currently, the mainstream distributed transaction models include the Percolator, Calvin, and XA models. As a mature enterprise-level distributed database, PolarDB-X follows the XA model in the implementation of distributed transactions.

As early as 1998, the OASIS organization formulated the XA spec to regulate the processing of distributed transactions. In the XA spec, there are three types of roles:

• AP (Application Program): Users of database services including applications.

• TM (Transaction Manager): The transaction processing manager, which is responsible for coordinating and managing the execution of transactions.

• RM (Resource Manager): The resource manager, which is responsible for managing and operating specific resources.

The XA spec defines a standard set of interfaces and operations that enable collaboration between TM and RM. The core of the XA spec is the two-phase commit (2PC) protocol. In the 2PC protocol, TM and RM communicate through a series of messages to ensure that all participating RMs perform corresponding operations in a distributed transaction and are eventually either committed or rolled back. In this process, the TM acts as a coordinator, responsible for initiating and managing the execution of the 2PC protocol.

The preceding figure shows a classic and mature two-phase commit algorithm in the distributed database field. Before the specific description, two related concepts and terms are added here:

• GMS (Global Metadata Service): To provide MVCC capabilities, distributed databases need to use GMS to sequence all distributed transactions.

• Transaction branch: A distributed transaction may involve multiple RMs, and each RM may have multiple branch transactions. All branch transactions together constitute a complete distributed transaction.

The entire two-phase commit algorithm is divided into three phases, namely:

1. PREPARE:

The TM issues a PREPARE command to all participating RMs. When all RMs respond to the request and return a success message, the PREPARE phase is completed.

2. GMS sequencing:

The TM accesses the GMS and obtains the global commit number (GCN) to sequence the distributed transaction. It is worth noting that this phase is not necessarily required in the 2PC algorithm. However, global sequencing is still a well-tested and accepted solution for achieving read consistency in the database industry.

3. COMMIT:

The TM sends a COMMIT command to all participating RMs. When all the RMs respond to the request and return a success message, the distributed transaction is committed.

How to efficiently implement distributed transactions directly affects the overall performance of distributed databases. Theoretically, the XA spec is quite complete and clear, but there is still room for discussion in specific engineering practices. From the perspective of distributed databases, the author believes that there are at least two optimization directions:

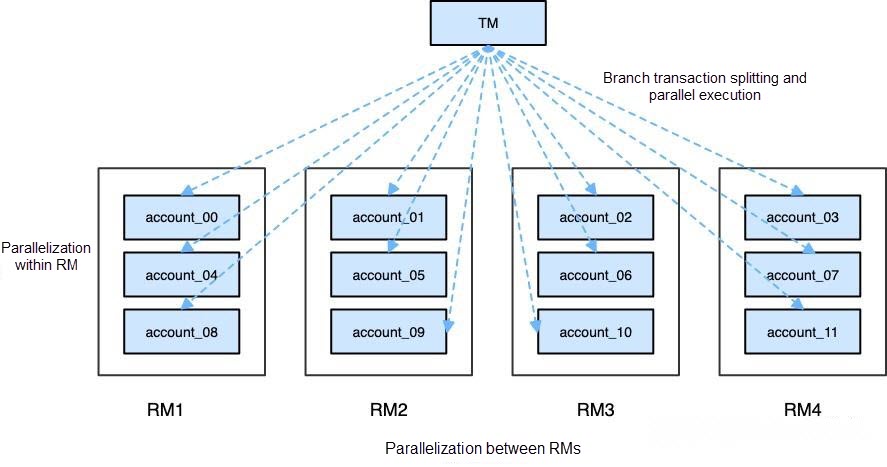

• Maximize parallelization, allowing branch transactions to be parallelly executed across RMs or within a single RM.

• Minimize interactions between different roles (TM, RM, and GMS). Each interaction is an RTT.

The PolarDB-X team has proposed the Transaction Group based on the Lizard transaction system to support a more flexible and efficient implementation of distributed transactions.

Compared with standalone databases, transaction splitting and parallelization are part of the key methods to improve the performance of distributed databases. In a distributed database, branch transactions among RMs can be parallelized without a doubt, but whether the branch transactions within a single RM can be parallelized remains to be discussed. The XA spec does not define the mapping relationship between RM and branch transactions. In actual engineering practice, it is generally believed that branch transactions can coexist in a single RM. However, the implementation of the specific transaction engine is actually divided into two sides:

• One side, represented by Oracle, believes that multiple branch transactions on each RM can share a transaction object.

• The other side, represented by InnoDB, believes that multiple branch transactions on each RM are independent transactions with no relationship.

The author does not intend to discuss the advantages and disadvantages of the two designs here, but in the case of InnoDB, it is feasible to run multiple branch transactions in parallel within a single RM. In the following figure, a company plans to pay allowances to some employees. The entire Account table is split into 12 partitions, which are evenly distributed across four RMs. In this case, the entire distributed transaction can be split into 12 branch transactions, and each branch transaction is responsible for modifying one partition. By performing finer-grained transaction splitting within the RM, the distributed database will be able to obtain a higher level of parallelization, improving the system throughput.

Parallel execution of multiple branch transactions under a single RM can improve performance, but there is also an issue of "write branch transaction isolation". Taking modifying the Accounts table as an example, three branch transactions are initiated at a certain moment:

• Branch transaction a, modifying account_00 on RM.

• Branch transaction b, modifying account_04 on RM.

• Branch transaction c, modifying account_08 on RM.

After the modification is completed (none of the three has been committed), you cannot query the modifications of branch transactions b and c through transaction a. The reason for this problem is that for RM, each branch transaction is independent, and RM is not aware of the relationship between these branch transactions.

Imagine a classic scenario of querying after modification. To ensure query visibility, TM must ensure that the SQL statements with the query range of account00 must be executed by branch transaction a and that the same applies to other ranges. Otherwise, the query cannot see the modified results due to the isolation of transactions, which limits the flexibility of branch transaction parallelization.

In fact, from the perspective of TM, these three branch transactions belong to one distributed transaction and should have the characteristics of a complete transaction. Therefore, it is reasonable for branch transaction a to see the modification of branch transactions b and c.

Is there a way to run branch transaction parallelization without worrying about "write branch transaction isolation"? After careful analysis and discussion, the PolarDB-X team believes that the core cause of the above problem is that a single RM has no awareness of the relationship between branch transactions, and each branch transaction is independent of the other and cannot cooperate effectively.

To this end, on the basis of the XA spec, the Lizard transaction system has proposed Transaction Group, which generalizes branch transactions belonging to the same distributed transaction and establishes the co-belonging relationship of branch transactions within RM. Transaction Group is located between RM and branch transactions and is designed to facilitate the collaboration of multiple branch transactions within a single RM, which is reflected in two aspects:

• On the one hand, multi-branch transactions within a single RM are still independent and can be executed concurrently.

• On the other hand, multi-branch transactions within a single RM belong to the same Transaction Group and can share context to a limited extent.

After the concept of Transaction Group is introduced, the problem mentioned above can be solved. When dealing with the problem of "write branch transaction isolation", you can share visibility between branch transactions through Transaction Group. Branch transactions that belong to the same Transaction Group can view the modifications of other branch transactions, regardless of the snapshot limit when the transaction is started.

In a distributed database, to meet the requirements of MVCC, TM needs to interact with GMS at least twice: the first is when the transaction starts, TM needs to obtain the current maximum GCN from GMS for snapshot reading; the second is during the two-phase commit, it gets the GCNs from GMS for distributed transaction sequencing. Through a simple comparison of GCNs, distributed databases can complete visibility judgment.

Distributed transactions involve interactions among multiple nodes, so they incur higher costs than standalone transactions. This is reflected in two aspects:

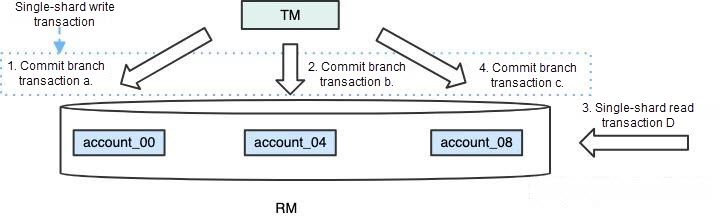

If the interaction between TM and RM or GMS can be reduced, the execution efficiency of distributed transactions will be greatly improved. In actual business scenarios, not all accesses and modifications are across RMs; a considerable number of accesses involve only one RM. PolarDB-X calls this special distributed transaction a single-shard transaction. In this case, the execution process of distributed transactions can be simplified. It should be noted that a single-shard transaction can also have multiple branch transactions, but these branch transactions are all located in an RM. Single-shard transactions can be further divided into single-shard read transactions and single-shard write transactions based on whether modifications are made. The execution process is simplified as follows:

Single-shard transactions can improve the performance of distributed databases, but they also pose challenges. Single-shard transactions under the same RM do not interact with GMS. The visibility between each other is determined internally by the transaction engine. In the existing InnoDB implementation, each branch transaction is an independent transaction, and InnoDB is not aware of the relationship between different branch transactions. The commits of different branches of a single-shard transaction are always in sequence, and other single-shard transactions may see partial commits.

Taking the modification of the Accounts table as an example, a single-shard write transaction E and a single-shard read transaction D are started at a certain time. Details are as follows:

Single-shard write transaction E (with three branches):

Single-shard read transaction D occurs after branch transactions a and b are committed but before branch transaction c is committed. Neither single-shard write transaction E nor single-shard read transaction D interacts with GMS. Therefore, the visibility of the transactions depends on the visibility checks of InnoDB's local transactions. If the isolation level is greater than or equal to read committed, transaction D can see the modifications of branch transactions a and b rather than those of branch transaction c.

In other words, for the AP, it is found that query D sees partial modifications of the write transaction E, but not the other part. This violates the atomicity of the transaction.

"Read query fails to read consistent state" is a special scenario of single-shard transactions in branch transaction parallelization. In essence, it is because multiple branch transactions under a single RM fail to show complete transaction characteristics when they should. The idea of dealing with this issue is similar to that of addressing write branch transaction isolation. You can build a Transaction Group and share the commit status.

Specifically, a single Transaction Group distinguishes between primary branch transactions and secondary branch transactions. There is only one primary branch transaction while the secondary branch transaction can be multiple. The status of the secondary branch transaction is partially determined by the primary branch transaction. Regarding the shared commit status, the commit status of the branch transaction is determined by the primary branch transaction. Once the primary branch transaction is committed, the secondary branch transaction is considered committed (even if it has not been committed in the transaction engine, namely, the transaction status has not been modified).

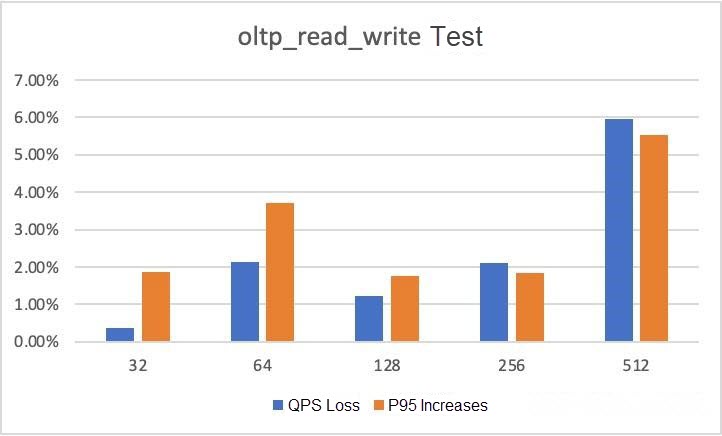

We deployed the polardbx-engine on an Intel 8269CY 104C physical machine. The test data consisted of 16 Sysbench tables, each with 10 million rows. We tested with Transaction Group disabled and enabled. To support testing of XA transactions, we modified Sysbench.

The preceding figure shows the test data of the Transaction Group in the Sysbench oltp_read_write scenario. It can be found that the cost of enabling Transaction Group is extremely low. Even at a high concurrency of 512 threads, there is only a 5.96% decrease in QPS and a 5.54% increase in P95 latency.

Transaction Group is a new exploration of the PolarDB-X team in optimizing distributed transaction implementation, reflecting the deep reflection of the PolarDB-X team on XA spec. In the future, more contexts can be shared through Transaction Group, making branch transaction management in a single RM more flexible. When sharing is not required, parallel capabilities are prioritized and when sharing is required, complete transaction characteristics are presented.

Breaking T-SQL Limitations: Extending ApsaraDB RDS SQL Server Boundaries with CLR Integration

ApsaraDB - March 17, 2025

ApsaraDB - January 23, 2024

ApsaraDB - October 17, 2024

ApsaraDB - October 17, 2024

ApsaraDB - January 17, 2024

ApsaraDB - November 7, 2024

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn More Lindorm

Lindorm

Lindorm is an elastic cloud-native database service that supports multiple data models. It is capable of processing various types of data and is compatible with multiple database engine, such as Apache HBase®, Apache Cassandra®, and OpenTSDB.

Learn MoreMore Posts by ApsaraDB