Recently, PolarDB topped the TPC-C benchmark ranking, exceeding the previous record by 2.5 times. It set TPC-C world records for both performance and cost-effectiveness, at 2.055 billion transactions per minute (tpmC) and a unit cost of CNY 0.8 per tpmC (price/tpmC).

Behind each seemingly simple number lies the relentless pursuit of database performance, cost-effectiveness, and stability by countless engineers. PolarDB has never stopped innovating. This series of articles, "PolarDB's Technical Secrets of Topping TPC-C", tells the story behind the "Double First Place". Stay tuned!

This is the fourth article in the series - High Availability - Smooth Switchover.


TPC-C is a benchmark model published by the Transaction Processing Performance Council (TPC) and designed specifically to evaluate online transaction processing (OLTP) systems. It exercises typical database operations, such as inserts, deletes, updates, and queries, to test the OLTP performance of a database. The final performance metric is tpmC (transactions per minute), which gives an intuitive measure of database performance.

In the TPC-C benchmark test, the high availability test is a crucial evaluation item. By simulating node failures, network disconnections, and other scenarios, it verifies that the database system can keep processing transactions, maintain data consistency, and recover quickly during failover. The test approach consists of deploying a multi-node cluster, injecting faults, and monitoring key metrics (such as throughput and latency) to confirm service continuity. The test matters because it evaluates disaster recovery capabilities, safeguards business continuity, helps improve architectural reliability, and builds user trust. The results can be used to quantify the recovery time objective (RTO) and recovery point objective (RPO), which in turn guide fault tolerance design and O&M.
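As an illustration of how monitored throughput can be turned into an RTO figure, the following minimal sketch measures the time from fault injection until throughput returns to a chosen fraction of its baseline. The function name `estimate_rto`, the sample format, and the 90% recovery threshold are assumptions made for this example; they are not part of the TPC-C specification or of PolarDB's tooling.

```python
from typing import List, Optional, Tuple


def estimate_rto(samples: List[Tuple[float, float]],
                 fault_time: float,
                 baseline_tps: float,
                 recovered_ratio: float = 0.9) -> Optional[float]:
    """Estimate RTO in seconds from a throughput time series.

    samples: (timestamp_seconds, transactions_per_second), sorted by time.
    Returns the delay between fault_time and the first sample at which
    throughput is back above recovered_ratio * baseline_tps after a drop,
    0.0 if throughput never dropped, or None if it never recovered.
    """
    threshold = baseline_tps * recovered_ratio  # assumed recovery criterion
    degraded = False
    for timestamp, tps in samples:
        if timestamp < fault_time:
            continue  # ignore samples taken before the fault was injected
        if tps < threshold:
            degraded = True
        elif degraded:
            return timestamp - fault_time
    return None if degraded else 0.0


# Example: a fault injected at t=2 s, with throughput recovering at t=4 s.
samples = [(0, 1000), (1, 1000), (2, 0), (3, 0), (4, 950), (5, 1000)]
rto = estimate_rto(samples, fault_time=2, baseline_tps=1000)
```

The resolution of the estimate is limited by the sampling interval, so a one-second sampling period can only report RTO to the nearest second.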

We use PolarDB for MySQL 8.0.2 for the TPC-C benchmark test. Figure 1 shows the high availability architecture of PolarDB for MySQL. Within the same zone, VotingDisk provides high-availability detection and failover of compute nodes, with an RTO measured in seconds and an RPO of 0. For cross-zone scenarios within the same region, X-Paxos, which is integrated into and optimized in the kernel, provides strongly synchronized replication of physical logs for disaster recovery. For cross-region scenarios, the global database network (GDN) synchronizes data efficiently.

Figure 1: High availability architecture of PolarDB for MySQL

In the traditional primary/secondary MySQL architecture, the primary and secondary nodes maintain a disaster recovery copy of the data through binlog replication and replay, achieving high availability through data redundancy. Thanks to the cloud-native shared storage architecture of PolarDB for MySQL, the primary node (RW) and the secondary node (RO) in the same zone share the same data, so high availability adds no extra storage cost. When the host or network of a compute node fails, PolarDB performs a failover with hot replica through deep, optimized coordination among its cloud-native components. The main technical features include:

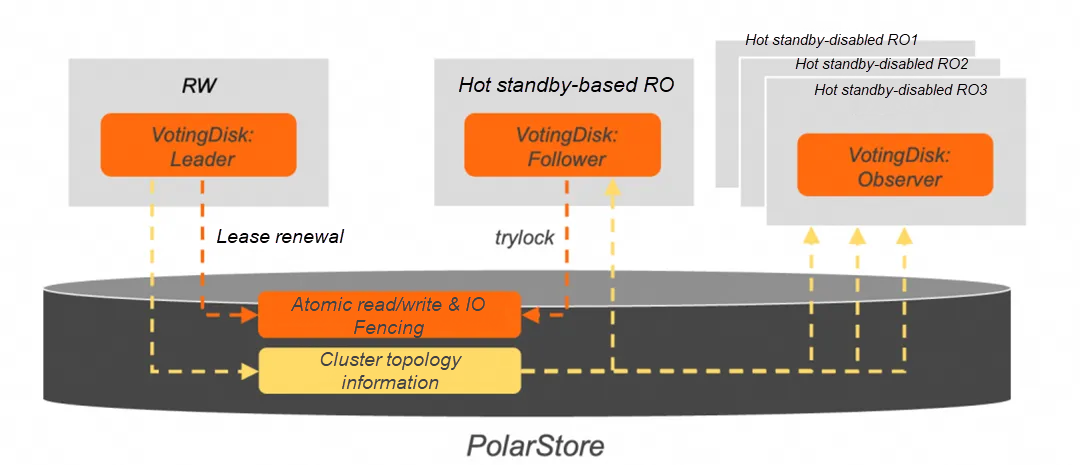

Based on the cloud-native shared storage architecture of PolarDB, compute nodes implement a lease-based distributed lock mechanism on top of the atomic read/write interface provided by PolarStore. The atomically accessed data blocks record metadata such as the lock holder and the lease time. The primary node (VD Leader) continuously updates this metadata through a lease renewal interface, which proves that the lock holder is healthy. Read-only (RO) nodes fall into two types: those with hot standby enabled and those with hot standby disabled. A hot standby-enabled read-only node (VD Follower) periodically attempts to acquire the lock with trylock semantics, which serves both to detect faults and to elect a new primary node. A hot standby-disabled read-only node (VD Observer) periodically retrieves the cluster topology and automatically connects to the new primary node if the primary node changes. Additionally, based on the I/O FENCE interface of the distributed shared storage, write permissions for each type of compute node are precisely controlled according to the protection scope of the lock, ensuring data security. The entire solution is called Voting Disk.
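The lease mechanism described above can be sketched as follows. This is a simplified, in-memory model rather than PolarDB's implementation: `AtomicBlock` is a hypothetical stand-in for an atomically accessed block on shared storage, the `compare_and_write` primitive is an assumption about what "atomic read/write" provides, and the 3-second lease is arbitrary. Time is passed in explicitly so the behavior is deterministic.

```python
import threading
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LeaseRecord:
    holder: Optional[str]  # node ID of the current lock holder
    expires_at: float      # time at which the lease lapses


class AtomicBlock:
    """Hypothetical stand-in for an atomic read/write block on shared storage."""

    def __init__(self) -> None:
        self._mutex = threading.Lock()
        self._record = LeaseRecord(holder=None, expires_at=0.0)

    def read(self) -> LeaseRecord:
        with self._mutex:
            return self._record

    def compare_and_write(self, expected: LeaseRecord, new: LeaseRecord) -> bool:
        # Write only if nobody changed the block since it was read (assumed primitive).
        with self._mutex:
            if self._record != expected:
                return False
            self._record = new
            return True


class VotingDiskNode:
    def __init__(self, node_id: str, block: AtomicBlock, lease_seconds: float = 3.0) -> None:
        self.node_id = node_id
        self.block = block
        self.lease_seconds = lease_seconds

    def try_lock(self, now: float) -> bool:
        """Follower path: take the lock only if it is free or its lease has lapsed."""
        current = self.block.read()
        if current.holder not in (None, self.node_id) and current.expires_at > now:
            return False  # another node still holds a valid lease
        new = LeaseRecord(self.node_id, now + self.lease_seconds)
        return self.block.compare_and_write(current, new)

    def renew(self, now: float) -> bool:
        """Leader path: extend the lease while this node still holds it."""
        current = self.block.read()
        if current.holder != self.node_id or current.expires_at <= now:
            return False
        new = LeaseRecord(self.node_id, now + self.lease_seconds)
        return self.block.compare_and_write(current, new)


block = AtomicBlock()
leader = VotingDiskNode("rw", block)
follower = VotingDiskNode("ro-hot", block)

leader.try_lock(now=0.0)      # leader acquires the lock
follower.try_lock(now=1.0)    # fails: lease is still valid
leader.renew(now=2.0)         # lease now runs until t=5
follower.try_lock(now=6.0)    # leader stopped renewing, so the follower takes over
```

In this model a failed leader is detected purely by the absence of renewals. A real system would additionally fence the old leader's writes, which is the role the I/O FENCE interface plays in the text above.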

Figure 2: Architecture of VotingDisk in PolarDB for MySQL

PolarDB uses the centralized shared storage of its cloud-native architecture directly to implement a distributed lock service at the computing layer, eliminating the need for third-party components such as ZooKeeper or Redis. Unlike shared-nothing distributed systems, it does not rely on consensus protocols such as Paxos for complex majority voting. VotingDisk has the following benefits:

PolarDB combines several technologies to achieve high availability and seamless switchover. In addition to serving reads, the hot standby-enabled read-only node reserves some resources for a global prefetching system, which synchronizes the primary node's metadata into memory in real time across the Buffer Pool, Undo, Redo, and Binlog modules. When a primary/secondary switchover occurs, the secondary node can obtain the relevant information directly from memory instead of issuing I/O operations to retrieve it from storage.

Figure 3: Global prefetching of the hot standby-based read-only node

Traditional primary/secondary switchovers often lead to connection interruptions and transaction rollbacks. For idle connections, PolarDB's persistent connection feature bridges applications and databases through its database proxy (PolarProxy). During switchovers, it preserves session states, such as variables and character sets, to ensure uninterrupted connections.
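The idea of preserving session state in the proxy can be sketched as below. This is an illustrative model only: `Backend` and `ProxySession` are hypothetical classes, not PolarProxy interfaces, and the sketch tracks just the character set and session variables mentioned above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


class Backend:
    """Hypothetical database node that records the statements it receives."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.received: List[str] = []

    def execute(self, sql: str) -> None:
        self.received.append(sql)


@dataclass
class ProxySession:
    """Client-facing session whose state the proxy remembers across switchovers."""
    backend: Backend
    charset: str = "utf8mb4"
    variables: Dict[str, str] = field(default_factory=dict)

    def set_charset(self, charset: str) -> None:
        self.charset = charset
        self.backend.execute(f"SET NAMES {charset}")

    def set_variable(self, name: str, value: str) -> None:
        self.variables[name] = value
        self.backend.execute(f"SET SESSION {name} = {value}")

    def switch_backend(self, new_backend: Backend) -> None:
        # Replay the remembered state on the new primary; the client
        # connection to the proxy is never closed.
        new_backend.execute(f"SET NAMES {self.charset}")
        for name, value in self.variables.items():
            new_backend.execute(f"SET SESSION {name} = {value}")
        self.backend = new_backend


old_primary = Backend("rw-old")
session = ProxySession(old_primary)
session.set_charset("gbk")
session.set_variable("sql_mode", "'ANSI'")

new_primary = Backend("rw-new")
session.switch_backend(new_primary)
# new_primary.received is now:
# ["SET NAMES gbk", "SET SESSION sql_mode = 'ANSI'"]
```

Because the proxy owns the client-facing connection, the application only sees a brief pause while the state is replayed on the new backend.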

Figure 4: Persistent connections

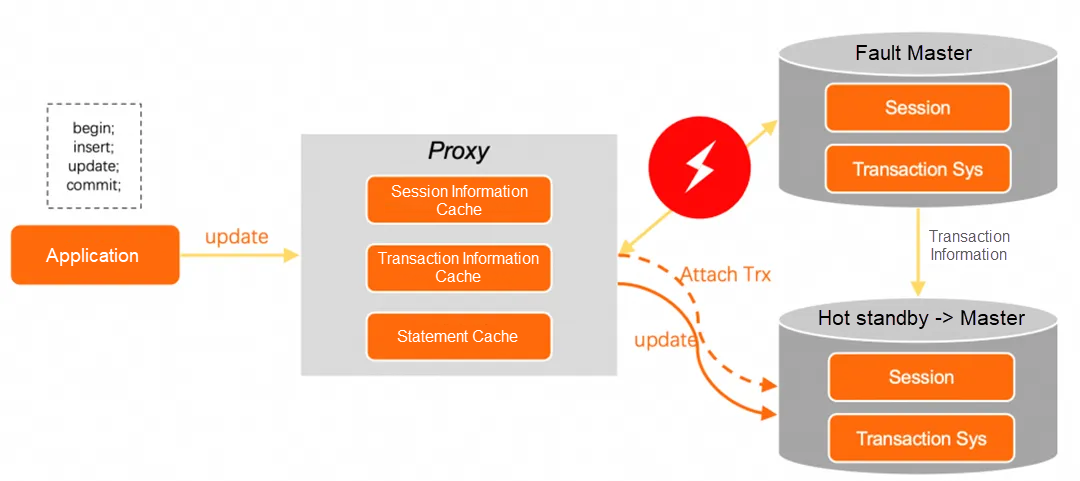

For a session with active transactions, persistent connections alone are not enough, because the proxy cannot store the transaction execution context. PolarDB therefore introduces the transaction resumable upload feature, which synchronizes transaction contexts between the primary and read-only nodes. During a primary/secondary switchover, the proxy queries the new primary node for the transaction context of the corresponding connection and restores it to the new connection, so that the transaction can continue executing. As a result, the application perceives only added latency, with no connection or transaction errors. Unlike traditional logical replication, PolarDB relies on physical replication to ensure complete data consistency between the primary and secondary nodes, which significantly improves switchover efficiency and reliability. For more details, see Failover with Hot Replica.
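The following toy model illustrates the flow described above: the primary mirrors each connection's transaction context to its hot standby, and after a switchover the proxy finds that context on the new primary and keeps executing the same transaction. All class and method names here (`TxnContext`, `Node`, `Proxy`) are hypothetical, and the real context contains far more than a list of statements.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TxnContext:
    txn_id: int
    statements: List[str] = field(default_factory=list)  # work done so far


class Node:
    """Hypothetical compute node that keeps per-connection transaction contexts."""

    def __init__(self, name: str, standby: Optional["Node"] = None) -> None:
        self.name = name
        self.standby = standby
        self.contexts: Dict[str, TxnContext] = {}

    def begin(self, conn_id: str, txn_id: int) -> None:
        self._store(conn_id, TxnContext(txn_id))

    def execute(self, conn_id: str, sql: str) -> None:
        ctx = self.contexts[conn_id]
        self._store(conn_id, TxnContext(ctx.txn_id, ctx.statements + [sql]))

    def _store(self, conn_id: str, ctx: TxnContext) -> None:
        self.contexts[conn_id] = ctx
        if self.standby is not None:
            # Mirror an independent copy of the context to the hot standby.
            self.standby.contexts[conn_id] = TxnContext(ctx.txn_id, list(ctx.statements))


class Proxy:
    def __init__(self, primary: Node) -> None:
        self.primary = primary

    def execute(self, conn_id: str, sql: str) -> None:
        self.primary.execute(conn_id, sql)

    def failover(self, conn_id: str, new_primary: Node) -> bool:
        """Point the connection at new_primary; True if a transaction was resumed."""
        self.primary = new_primary
        return conn_id in new_primary.contexts


hot_standby = Node("ro-hot")
primary = Node("rw", standby=hot_standby)
proxy = Proxy(primary)

primary.begin("conn-1", txn_id=42)
proxy.execute("conn-1", "UPDATE t SET v = 1")

resumed = proxy.failover("conn-1", hot_standby)   # primary fails here
proxy.execute("conn-1", "UPDATE t SET v = 2")     # same transaction continues
```

After the switchover, the standby's context for `conn-1` still carries transaction 42 and both statements, which is why the application observes latency rather than a rollback.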

Figure 5: Transaction resumable upload of PolarDB for MySQL

PolarDB for MySQL supports multi-master clusters (Limitless). You can upgrade a PolarDB for MySQL cluster with one primary node and multiple read-only nodes to PolarDB for MySQL Multi-master Cluster (Limitless) Edition by adding primary (RW) nodes. Each primary node in a multi-master cluster can be assigned its own Voting Disk lease-based distributed lock, and can also be configured with a private read-only node as its secondary node. You can customize the number of read-only nodes in the cluster. In addition to serving as secondary databases, read-only nodes can provide globally consistent read services during normal operation. At the minimum, you can configure zero read-only nodes, in which case each primary node monitors the status of the other primary nodes and acts as their backup. If a primary node becomes unavailable, the database shards of the faulty node are moved to a healthy node. Note, however, that the overall performance of the cluster may suffer under high workloads because fewer resources are available.
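As a rough illustration of moving shards off a failed primary node, the sketch below reassigns each shard to the surviving node that currently owns the fewest shards. The least-loaded policy and the function name `reassign_shards` are assumptions for this example; the source does not specify how the target node is chosen.

```python
from typing import Dict, List


def reassign_shards(assignment: Dict[str, List[str]],
                    failed_node: str) -> Dict[str, List[str]]:
    """Return a new node -> shards mapping with failed_node's shards moved.

    Each orphaned shard goes to the surviving node with the fewest shards
    (ties broken by node name), which is an assumed placement policy.
    """
    survivors = {node: list(shards)
                 for node, shards in assignment.items()
                 if node != failed_node}
    if not survivors:
        raise RuntimeError("no surviving primary node to take over shards")
    for shard in assignment.get(failed_node, []):
        target = min(survivors, key=lambda node: (len(survivors[node]), node))
        survivors[target].append(shard)
    return survivors


before = {"rw1": ["db1", "db2"], "rw2": ["db3"], "rw3": ["db4", "db5"]}
after = reassign_shards(before, failed_node="rw1")
# after == {"rw2": ["db3", "db1", "db2"], "rw3": ["db4", "db5"]}
```

The example also makes the performance caveat concrete: after the failure, `rw2` serves three shards instead of one with the same resources.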

A sysbench read_write test with 32 concurrent threads was run to verify the effect of the primary/secondary switchover. As shown in Figure 6, the only impact at the moment of switchover is slow queries lasting over 1 second; no disconnections are reported.

Figure 6: Failover with hot replica

If you need to perform disaster recovery across zones in the same region, PolarDB provides a multi-level disaster recovery system.

Figure 7: Multi-zone disaster recovery in the same region

In the multi-zone deployment in the same region, PolarDB for MySQL provides the following disaster recovery solutions:

1. Dual-zone asynchronous synchronization - peer-to-peer storage:

2. Dual-zone asynchronous synchronization - peer-to-peer computing and storage:

3. Dual-zone semi-synchronization:

4. Multi-zone strong synchronization:

A global database network (GDN) consists of multiple PolarDB clusters that are deployed in multiple regions. Data is synchronized across all clusters in a GDN, which enables geo-disaster recovery. All clusters handle read requests while write requests are handled only by the primary cluster. GDN is ideal for the following scenarios:

If you deploy applications in multiple regions but deploy databases only in the primary region, applications that are not deployed in the primary region must communicate with the databases that may be located in a geographically distant region. This results in high latency and poor performance. GDN replicates data across regions at low latencies and provides cross-region read/write splitting. GDN allows applications to read data from a database local to the region. This allows databases to be accessed within 2 seconds.
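Cross-region read/write splitting can be pictured with the small routing sketch below: reads go to the cluster in the application's own region when one exists, and writes always go to the primary cluster. The `Cluster` type, the `route` function, the region names, and the prefix-based read detection are all assumptions for illustration; they do not describe how GDN classifies statements.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Cluster:
    region: str
    is_primary: bool


def route(statement: str, app_region: str, clusters: Dict[str, Cluster]) -> Cluster:
    """Send reads to the local cluster if there is one, everything else to the primary."""
    primary = next(c for c in clusters.values() if c.is_primary)
    # Naive classification: statements such as SELECT ... FOR UPDATE would
    # need extra handling in a real router.
    is_read = statement.lstrip().upper().startswith(("SELECT", "SHOW"))
    if is_read and app_region in clusters:
        return clusters[app_region]
    return primary


# Hypothetical two-region GDN keyed by region name.
clusters = {
    "cn-hangzhou": Cluster("cn-hangzhou", is_primary=True),
    "us-west-1": Cluster("us-west-1", is_primary=False),
}

local_read = route("SELECT * FROM orders", "us-west-1", clusters)
remote_write = route("UPDATE orders SET paid = 1", "us-west-1", clusters)
```

Here `local_read` resolves to the `us-west-1` cluster and `remote_write` to the primary in `cn-hangzhou`, so only writes pay the cross-region round trip.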

GDN supports geo-disaster recovery regardless of whether your applications are deployed in the same region. If a fault occurs in the region where the primary cluster is deployed, you only need to manually switch your service over to a secondary cluster.

Compared with other database services or binlog-based middleware solutions, PolarDB GDN demonstrates significant advantages:

• Low latency: PolarDB GDN synchronizes data based on redo logs. Compared with binlog synchronization, redo logs do not rely on transaction commits and are more efficient for concurrent data page modifications. PolarDB GDN optimizes pipeline and multi-channel replication techniques, significantly reducing cross-region replication latency.

• Low cost: You are not charged for the traffic that is generated during cross-region data transmission within a GDN. You are charged only for the use of PolarDB clusters in the GDN.

• Integrated solution: With kernel-level bidirectional replication, read/write control, and active-active failover, PolarDB GDN eliminates risks and O&M complexity caused by intricate links of third-party tools.

PolarDB GDN also provides additional features to meet diverse requirements for cross-region databases, for example:

• Global domain name: The global domain name feature offers a unified connection address for GDN. You can use the global domain name feature to access the nearest cluster and keep the domain name unchanged after the primary cluster is switched.

• GDN 2.0 proximity write: GDN 2.0 supports multi-write capabilities at the database, table, and partition levels. It controls the write permissions for databases, tables, and partitions to avoid risky write conflicts. Unit sequences are introduced to coordinate auto-increment keys across regions. It also delivers one-click failover at three levels: instance, zone, and region.
